depth of field

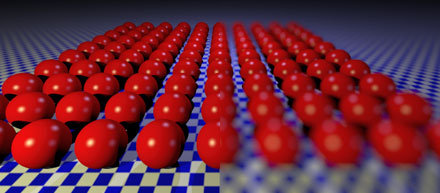

December 4th, 2005 by Bramz

Some time ago, I mentioned I was implementing depth of field in LiAR. Well, I finally managed to code and test it. The image illustrates the depth of field effect. The left part is rendered by a pinhole camera, the right part by a camera with a circular lens. You can find the script in CVS: examples/cameras/depth_of_field.py

Adding depth of field to a ray tracer is rather easy once you’re familiar with the concepts of distributed ray tracing (which isn’t too hard either). First, you generate a primary ray as usual and determine its intersection with the focal plane. This is a (virtual) plane orthogonal to the camera direction, and all points on this plane will be in focus. Then you generate a new origin for the primary ray. This is done by picking a random point on the camera “lens”, which is a disk orthogonal to the camera direction with the camera position as its center. The best way to do this is by generating stratified samples in the range $\left[0,1\right]\times\left[0,1\right]$ and transforming them to a disk (Peter Shirley has written a paper on how exactly to do this). Finally, you generate the new ray direction from the point on the lens to the intersection on the focal plane. This makes sure everything on the focal plane is indeed in focus. Voilà, that’s it!
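
To make the steps above concrete, here is a minimal Python sketch. It is not LiAR's actual code: the function names (`stratified_samples`, `square_to_disk`, `lens_ray`), the tuple-based vectors, and the parameters `focal_distance` and `lens_radius` are all made up for illustration. For the square-to-disk step it uses a concentric mapping, which I assume is close to what Shirley's paper describes. It also assumes `cam_dir`, `right` and `up` form an orthonormal basis.

```python
import math
import random


def stratified_samples(n, rng=random):
    """Yield n*n jittered samples in [0,1) x [0,1), one per grid cell."""
    for i in range(n):
        for j in range(n):
            yield ((i + rng.random()) / n, (j + rng.random()) / n)


def square_to_disk(u, v):
    """Concentric mapping from the unit square to the unit disk (assumed variant)."""
    a, b = 2.0 * u - 1.0, 2.0 * v - 1.0  # remap to [-1,1] x [-1,1]
    if a == 0.0 and b == 0.0:
        return (0.0, 0.0)
    if abs(a) > abs(b):
        r, phi = a, (math.pi / 4.0) * (b / a)
    else:
        r, phi = b, (math.pi / 2.0) - (math.pi / 4.0) * (a / b)
    return (r * math.cos(phi), r * math.sin(phi))


def add(p, q):
    return tuple(x + y for x, y in zip(p, q))


def sub(p, q):
    return tuple(x - y for x, y in zip(p, q))


def scale(p, s):
    return tuple(x * s for x in p)


def dot(p, q):
    return sum(x * y for x, y in zip(p, q))


def normalize(p):
    return scale(p, 1.0 / math.sqrt(dot(p, p)))


def lens_ray(origin, direction, cam_dir, right, up,
             focal_distance, lens_radius, u, v):
    """Turn a pinhole primary ray into a ray through a circular lens.

    origin is the camera position, direction the pinhole ray direction,
    and (u, v) a sample in the unit square. All names are hypothetical.
    """
    # 1. Intersect the pinhole ray with the focal plane, which is orthogonal
    #    to cam_dir at focal_distance from the camera position.
    t = focal_distance / dot(direction, cam_dir)
    focus = add(origin, scale(direction, t))
    # 2. Pick the new ray origin on the lens disk around the camera position.
    dx, dy = square_to_disk(u, v)
    lens_point = add(origin, add(scale(right, lens_radius * dx),
                                 scale(up, lens_radius * dy)))
    # 3. Aim the new ray at the focal-plane intersection.
    return lens_point, normalize(sub(focus, lens_point))
```

With `lens_radius` set to zero every sample collapses onto the camera position, so you get the pinhole camera back. For each pixel you would call `lens_ray` once per stratified sample and average the results.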
